| SQL Server | Postgres |
| --- | --- |
| bigint | numeric(20,0) |
| binary | bytea |
| bit | numeric(1,0) |
| char | character(10) |
| date | date |
| datetime | timestamp without time zone |
| datetime2 | timestamp(6) without time zone |
| datetimeoffset | timestamp(6) with time zone |
| decimal | numeric(18,0) |
| float | double precision |
| geography | character varying(8000) |
| geometry | character varying(8000) |
| hierarchyid | character varying(8000) |
| image | bytea |
| money | numeric(19,4) |
| nchar | character(10) |
| ntext | text |
| numeric | numeric(18,0) |
| nvarchar | character varying(10) |
| real | double precision |
| smalldatetime | timestamp without time zone |
| smallint | numeric(5,0) |
| smallmoney | numeric(10,4) |
| sql_variant | character varying(8000) |
| sysname | character varying(128) |
| text | text |
| time | time(6) without time zone |
| timestamp | character varying(8000) |
| tinyint | numeric(5,0) |
| uniqueidentifier | uuid |
| varbinary | bytea |
| varchar | character varying(10) |
| xml | xml |
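A mapping like the one in the table is easy to use as a lookup when generating Postgres DDL from a SQL Server schema. Below is a minimal sketch in Python, assuming the pairs are copied verbatim from the table, including the lengths and precisions shown. The names `SQLSERVER_TO_POSTGRES` and `to_postgres_type` are hypothetical and only for illustration.

```python
# Lookup built from the table above; values are copied as listed,
# including the lengths and precisions shown there.
SQLSERVER_TO_POSTGRES = {
    "bigint": "numeric(20,0)",
    "binary": "bytea",
    "bit": "numeric(1,0)",
    "char": "character(10)",
    "date": "date",
    "datetime": "timestamp without time zone",
    "datetime2": "timestamp(6) without time zone",
    "datetimeoffset": "timestamp(6) with time zone",
    "decimal": "numeric(18,0)",
    "float": "double precision",
    "geography": "character varying(8000)",
    "geometry": "character varying(8000)",
    "hierarchyid": "character varying(8000)",
    "image": "bytea",
    "money": "numeric(19,4)",
    "nchar": "character(10)",
    "ntext": "text",
    "numeric": "numeric(18,0)",
    "nvarchar": "character varying(10)",
    "real": "double precision",
    "smalldatetime": "timestamp without time zone",
    "smallint": "numeric(5,0)",
    "smallmoney": "numeric(10,4)",
    "sql_variant": "character varying(8000)",
    "sysname": "character varying(128)",
    "text": "text",
    "time": "time(6) without time zone",
    "timestamp": "character varying(8000)",
    "tinyint": "numeric(5,0)",
    "uniqueidentifier": "uuid",
    "varbinary": "bytea",
    "varchar": "character varying(10)",
    "xml": "xml",
}


def to_postgres_type(sqlserver_type: str) -> str:
    """Return the Postgres type listed for a SQL Server type name.

    The lookup ignores case and surrounding whitespace. Types that are
    not in the table raise ValueError instead of being guessed.
    """
    key = sqlserver_type.strip().lower()
    try:
        return SQLSERVER_TO_POSTGRES[key]
    except KeyError:
        raise ValueError(
            f"no mapping listed for SQL Server type {sqlserver_type!r}"
        ) from None
```

For example, `to_postgres_type("uniqueidentifier")` looks up the `uuid` row. A type missing from the table raises an error, so gaps are noticed rather than silently defaulted.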

Sunday, November 5, 2017

SQL Server and Postgres Datatypes

Monday, June 20, 2016

Migrate SQL Server Database to AWS (RDS) or Azure using SSIS


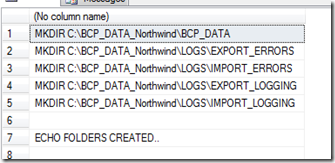

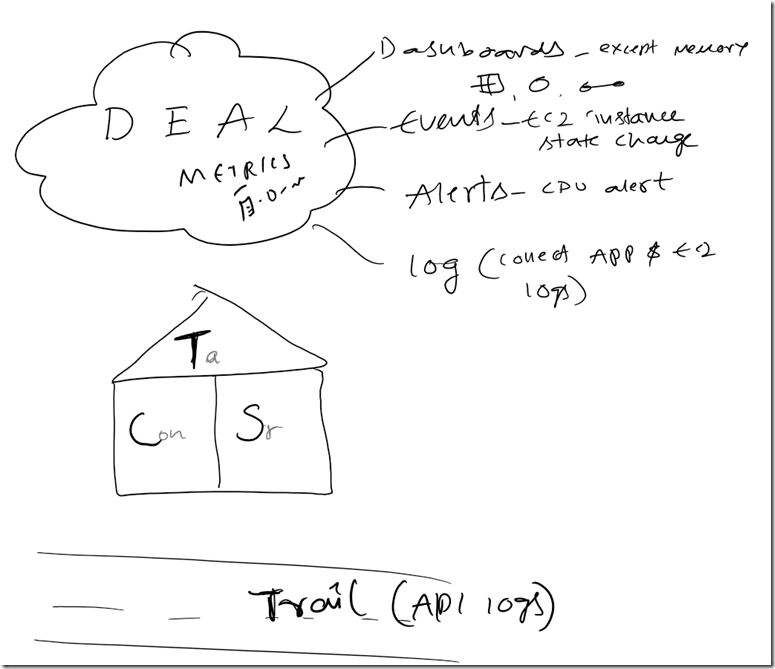

When backup and restore is not an option for migrating or copying a whole database, you need an import/export mechanism to move the data. This is typically the case with managed database services such as AWS RDS or Azure, or any environment that restricts restoring backups. Most DBAs are familiar with both techniques; here I am highlighting the problems with the first one and a way around them.

SSIS Export Wizard:

1. Identity Insert must be ON for every table that has an identity column. Identifying which tables have this property and enabling the option manually in each data flow is very tedious.
2. Keep Nulls must be ON for tables with default values. E.g., if a table has a nullable date column with getdate() as its default, the export inserts date values instead of NULLs unless the SSIS data flow destination property FastLoadKeepNulls is checked.
3. Fast load options are not set by default; they have to be set manually for the property sFastLoadOptionsBatchCommit.
4. TABLOCK is not enabled by default.
5. ROWS_PER_BATCH is not configured by default.

These five settings have to be applied manually. Without them the package would fail or perform slowly.

How it works:

Note: This has been tested on SQL Server 2008 EE. If it doesn't work in your environment, modify the key strings to match your release.

Steps:

1. Run the Export Data wizard and save the package to C:\TEMP\northwind.dtsx
2. Copy the VBScript code below to a file, C:\TEMP\SetSSIS.VBS
3. Edit the VBScript, line 5: filePath = "<ssis file path>"
4. Run the VBScript
5. It creates a new package with the new name <PKG_NAME>.NEW
6. Open the package in BIDS
7. Run the package

BCP:

Check the link below, which uses BCP to migrate the data.
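The approach described above, rewriting the saved package so the fast load settings are applied to every destination, can be sketched in Python as well. This is not the author's VBScript. It is a rough illustration that makes several assumptions: each destination setting is stored in the .dtsx XML as a `<property ... name="...">value</property>` element, the property names are `FastLoadKeepIdentity`, `FastLoadKeepNulls` and `FastLoadOptions`, the file is UTF-8, and the output name is the input path plus `.NEW`. The helper names and the `rows_per_batch` default are hypothetical. As the note above says, check the key strings against a package from your own release.

```python
import re


def set_property(xml, name, new_value):
    """Rewrite the text of every <property ... name="NAME">old</property>.

    new_value is either a string or a function that maps old to new.
    The element layout is an assumption about the .dtsx format.
    """
    pattern = re.compile(
        r'(<property\b[^>]*\bname="' + re.escape(name) + r'"[^>]*(?<!/)>)'
        r'(.*?)(</property>)',
        re.DOTALL,
    )

    def repl(match):
        old = match.group(2)
        value = new_value(old) if callable(new_value) else new_value
        return match.group(1) + value + match.group(3)

    return pattern.sub(repl, xml)


def merge_fast_load_options(current, rows_per_batch):
    """Keep existing options, ensure TABLOCK, set ROWS_PER_BATCH."""
    options = [o.strip() for o in current.split(",") if o.strip()]
    options = [o for o in options
               if not o.upper().startswith("ROWS_PER_BATCH")]
    if "TABLOCK" not in (o.upper() for o in options):
        options.insert(0, "TABLOCK")
    options.append(f"ROWS_PER_BATCH = {rows_per_batch}")
    return ",".join(options)


def apply_fast_load_settings(xml, rows_per_batch=10000):
    """Apply the identity, nulls, TABLOCK and ROWS_PER_BATCH settings.

    For simplicity this touches every destination in the package,
    not only tables that have an identity column.
    """
    xml = set_property(xml, "FastLoadKeepIdentity", "true")
    xml = set_property(xml, "FastLoadKeepNulls", "true")
    xml = set_property(
        xml, "FastLoadOptions",
        lambda old: merge_fast_load_options(old, rows_per_batch),
    )
    return xml


def write_new_package(path, rows_per_batch=10000):
    """Read a package and write the modified copy next to it.

    The ".NEW" suffix on the full file name and the UTF-8 encoding
    are assumptions; adjust both to match your environment.
    """
    with open(path, encoding="utf-8", newline="") as f:
        xml = f.read()
    new_path = path + ".NEW"
    with open(new_path, "w", encoding="utf-8", newline="") as f:
        f.write(apply_fast_load_settings(xml, rows_per_batch))
    return new_path
```

The sketch leaves the batch commit setting from item 3 alone. Properties it does not name are passed through unchanged, so the original package can be compared with the new one before opening it in BIDS.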

